Claude Sonnet Is Teaching Me English

Today, even a lazy after-thought can become a useful project

Marco Giancotti,

Cover image:

Children Teaching a Cat to Read, Jan Steen

Once upon a time I published my English mistakes for the whole world to see. Those were simpler, wilder days. Then language models, far more capable with English than I, took on the role of proofreaders of every blog post I wrote. I would write my thing, polish it to the best of my abilities, then hand it over to GPT-4.1 and friends for a final brush-up of the prose. For a while, I thought that was all I needed.

The next phase seems inevitable in retrospect: instead of fixing my text, AI should be fixing me. Teach a man to fish, the saying goes. Humans are the root of all problems and must be optimized away.

So I built (well, I had Claude Sonnet 4 build) an ultra-customized English improvement system based only on my own specific mistakes.

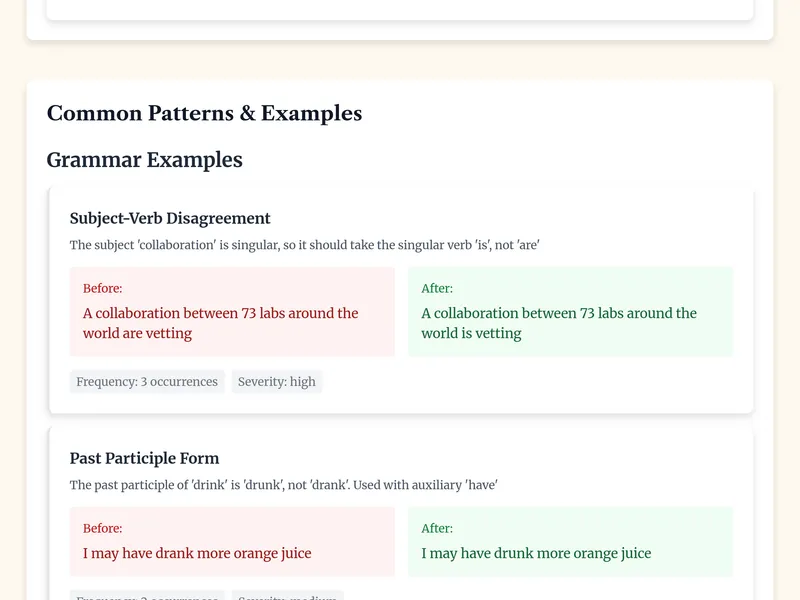

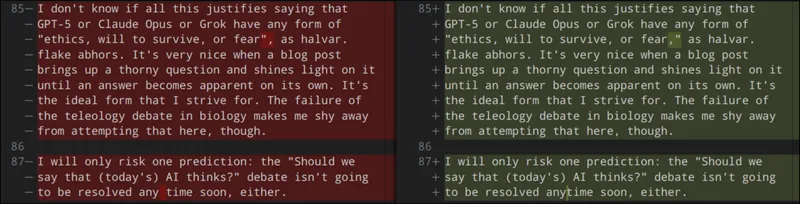

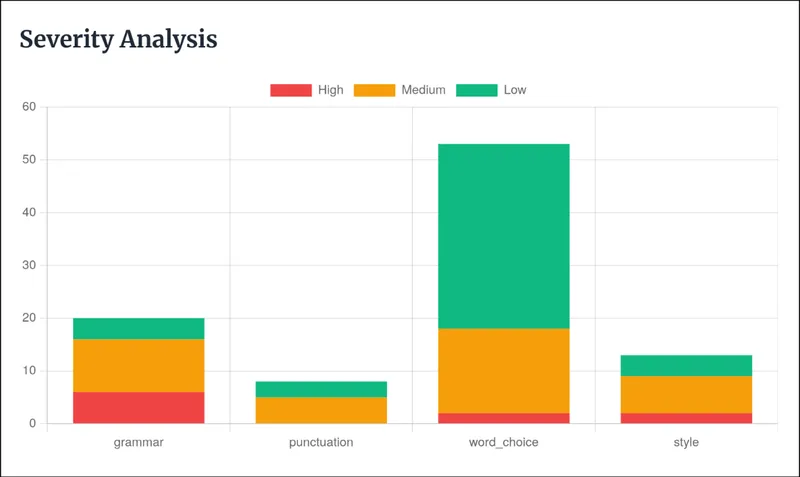

It takes the entire history of proofreading corrections that AI models applied to my blog posts in the past 6 months, and analyzes the hell out of them. Then it presents the results to me like this:

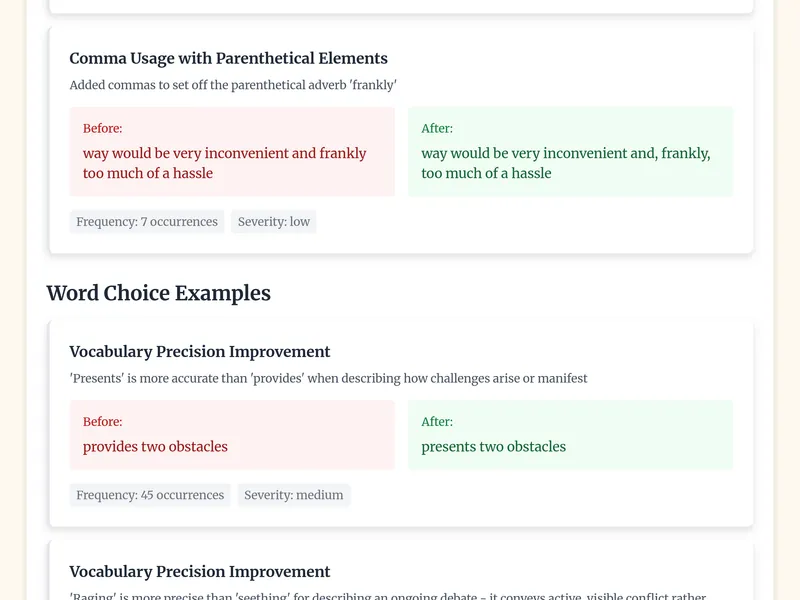

This kind of analysis is feasible because I write my posts in Markdown, a code-based text markup language. While writing, my text looks like this:

Words follow each other, and those that I want to italicize I simply surround with *single asterisks*, those I want to bold (embolden?) I surround with **double asterisks**.

Lists are just:

- Hyphens...

1. or numbers I type...

2. directly into the text editor

And so on, with a small number of other formatting conventions like that, entirely based on typing special characters with my clumsy fingers.

This means that I can write everything in plain-text files in a standard code editor, and all the amazing AI coding tools can access, edit, and interact in various ways with everything I write. I also get to keep a complete and exact version control history of every single change applied to any of the files in the website.
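Because every revision lives in version control, the before/after pairs can be recovered mechanically. The sketch below is only an illustration, not the actual pipeline Claude built. It assumes the two revisions of a post are already available as strings (for example, from `git show <commit>:<path>` on consecutive commits). `correction_pairs` is a hypothetical helper built on Python's standard `difflib`.

```python
import difflib
import re


def correction_pairs(before: str, after: str) -> list[tuple[str, str]]:
    """Return (original, corrected) spans where two revisions of a text differ.

    Hypothetical helper: `before` and `after` are assumed to be two versions
    of the same post, e.g. fetched from consecutive git commits.
    """
    # Split into words and individual punctuation marks, so that an added
    # or removed comma shows up as its own small edit.
    a = re.findall(r"\w+|[^\w\s]", before)
    b = re.findall(r"\w+|[^\w\s]", after)
    matcher = difflib.SequenceMatcher(a=a, b=b, autojunk=False)
    pairs = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        # 'replace', 'delete' and 'insert' opcodes are the corrections;
        # 'equal' stretches are text that was left untouched.
        if tag != "equal":
            pairs.append((" ".join(a[i1:i2]), " ".join(b[j1:j2])))
    return pairs
```

For instance, `correction_pairs("However I think so", "However, I think so")` yields `[("", ",")]`, a single inserted comma. Working at the token level rather than the line level keeps each correction small enough to categorize on its own.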

For this reason, the AI has full access to a catalog of all of my mistakes in a before/after format:

This makes it easy to create a processing pipeline that uses AI to categorize and explain the mistakes, then some old-fashioned code to tally up the numbers, then other AI calls to pick representative examples. Everything is then put into a web page available only to me.
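The "old-fashioned code to tally up the numbers" can be very small. This is a minimal sketch that assumes each correction has already been labeled with a category upstream (for instance, by an LLM call). The category names and the `summarize` helper are hypothetical.

```python
from collections import Counter, defaultdict


def summarize(labeled):
    """Tally labeled corrections and group them by category.

    `labeled` is assumed to be a list of (category, before, after) tuples,
    where the category was assigned in an earlier (e.g. LLM) step.
    Returns a list of (category, count, percent) rows, most frequent first,
    plus a dict mapping each category to its (before, after) pairs.
    """
    counts = Counter(category for category, _, _ in labeled)
    total = sum(counts.values())
    examples = defaultdict(list)
    for category, before, after in labeled:
        examples[category].append((before, after))
    report = [
        (category, n, round(100 * n / total, 1))
        for category, n in counts.most_common()
    ]
    return report, dict(examples)
```

The grouped examples are what a later step, such as another model call, could pick representative cases from. Rerunning the tally on a fresh history is also enough to compare distributions between runs.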

I plan to run this again periodically, to see whether the number and distribution of mistakes evolve over time as I try to fix my English.

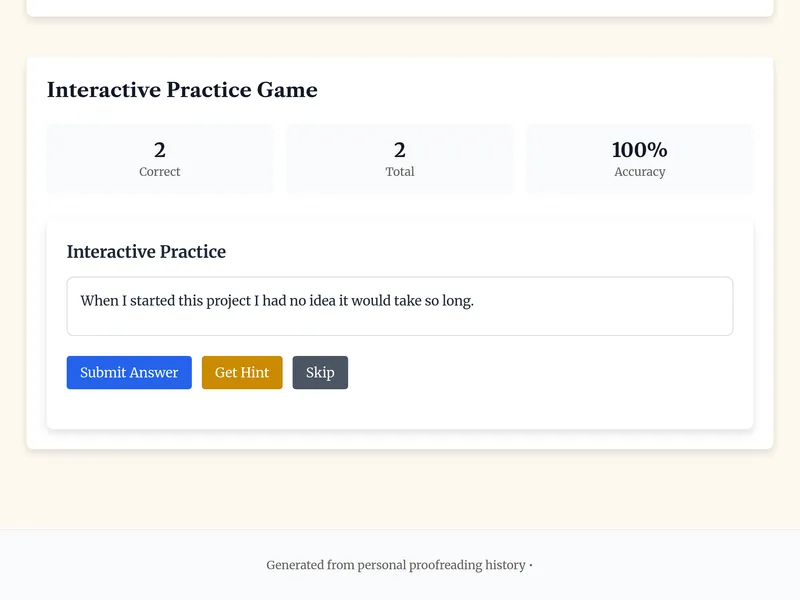

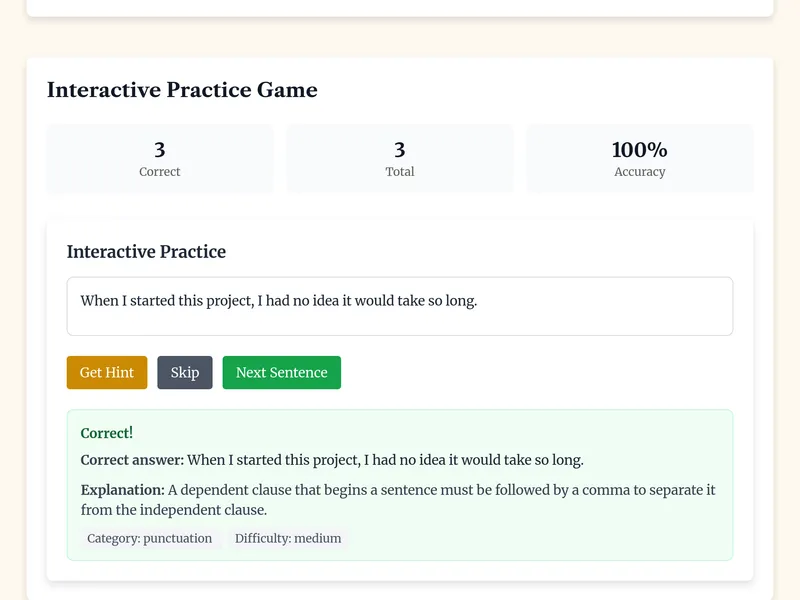

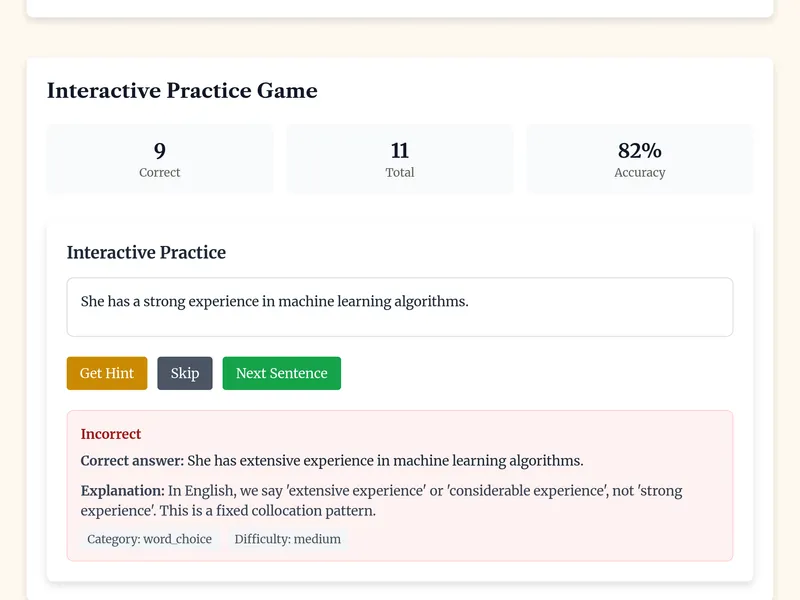

While I was at it, I also asked Claude to create a mini-game to test me on my weakest points:

This was a quick experiment, and I can think of many ways to improve this system. For example, the generated exercises are based on my own mistakes, but some of them tend to be either too easy or almost impossible (I had to ask it not to give me word-choice problems, because they are too open and subjective). This could be improved with some more prompt engineering. Better still, I could build interactive LLM evaluation and feedback straight into the interface with a little more work.
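As a rough illustration of how exercises can be derived from past corrections, here is a hypothetical sketch (not the code Claude actually generated). It turns each correction into a two-option fill-in-the-blank item and skips the word-choice category, mirroring the restriction described above. The tuple layout and function names are assumptions.

```python
import random


def make_exercise(context: str, wrong: str, right: str, rng: random.Random):
    """Turn one past correction into a two-option fill-in-the-blank item.

    Assumption: `context` is the corrected sentence and contains `right`,
    the corrected span, which gets replaced by a blank.
    """
    if not right or right not in context:
        raise ValueError("corrected span must appear in the context sentence")
    prompt = context.replace(right, "____", 1)
    # An empty `wrong` means the fix was an insertion (e.g. a missing comma).
    options = [wrong or "(nothing)", right]
    rng.shuffle(options)
    return prompt, options, right


def build_quiz(items, rng=None, skip=("word choice",)):
    """Build exercises from (category, context, wrong, right) tuples.

    Categories in `skip` are left out, since open-ended word-choice
    questions make poor multiple-choice items.
    """
    rng = rng or random.Random()
    return [
        make_exercise(context, wrong, right, rng)
        for category, context, wrong, right in items
        if category not in skip
    ]
```

Passing a seeded `random.Random` makes the option order reproducible, which helps when testing the quiz logic.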

But that's not really what I want to show you. Here is what: a new way to use computers is becoming possible with AI agents.

I spend only $20 per month on a Claude subscription that I already had for other reasons, and this whole exercise took less than 2 hours of my time, spread over a couple of days. Its usefulness, however, might extend for months or years. And this is only an easy example to share: lately I've been building ad-hoc, AI-enhanced solutions like this for the little inconveniences in my life all the time.

Before AI, I would never have dreamed of building a tailor-made system like this. Not because it can't be done without AI (it can), nor because I couldn't write the code myself, but simply because it would have taken considerably more of my time—more than I think it's worth.

In other words, a host of useful, but previously too costly, solutions is becoming affordable with these tools. Not just fancy products by trendy startups, but one-of-a-kind apps ideated, created, and consumed by you alone, nearly for free.

I never planned to have AI make statistics and games out of my punctuation mistakes: I just suddenly realized that it was a low-hanging fruit right there, waiting for me (and no one else) to pick it. It's the era of applications as home-cooked meals.

The tooling for these things is getting better every month. Today, this kind of solution still needs a modicum of technical and programming skills, but I expect the situation to be quite different one year down the road. ●