Human Language Is Not a Network

A visual explanation

Marco Giancotti,


Cover image:

Cacatoës et magnolia, bordure. Souris blanches. (1897), Maurice Pillard Verneuil

What my eyes saw was simultaneous; what I shall write is successive, because language is successive. Something of it, though, I will capture.

— The Aleph, Jorge Luis Borges

For all the fancy things you can do with words, the one thing you can't do is speak more than one word at once. Voice is mono. Every text is the storied coiling of a single string of letters: all lines in a book are turns of the same necklace.

Here lies the impossible challenge of language: the universe is a network, a seamless mesh of things that interact at the same time, at all scales, and in three dimensions. How does one even begin to represent that with a serial succession of words?

Even a picture like this—itself an enormous reduction of the actual place and events that it portrays—presents all sorts of challenges when you try to put it into words:

This is how GPT-4o describes it:

A natural scene in what appears to be a savanna or grassland environment. In the foreground, there are two rhinoceroses walking across a dirt path. The larger rhinoceros is likely an adult, while the smaller one is a juvenile. Both animals have thick, grayish skin and prominent horns on their noses.

In the background, there are more rhinoceroses and a herd of antelopes, possibly impalas, grazing or moving through the landscape. The vegetation consists of dry grasses and scattered trees with autumn-colored leaves.

The scene is set against a backdrop of rolling hills or low mountains, covered in green vegetation, which contrasts with the dry foreground. The sky is overcast.

Loosely speaking (this isn't a rigorous analysis), the verbs in bold red tell us what is there: they pick some aspects of the picture and assign names (boundaries) to them. These are copular verbs like is, are, and appear, and other linking verbs like have and consist of. Adjectives and adjectival phrases "customize" the general categories mentioned.

Most of the sentences in this description are like that: mere statements about what's there. This is typical for descriptions of static things, but less typical for real-life scenes, where we usually need "active" verbs, verbs representing interactions. In the description above, only a few verbs are active, and for those I've marked the whole sentences in yellow:

- two rhinoceroses walking across a dirt path

- a herd of antelopes grazing

- green vegetation, which contrasts with the dry foreground

They tell not merely what's there, but what their relationships are—explicit or implicit:

- Rhinos → path

- Antelopes → (implied grass)

- Vegetation → foreground

But there are a million interactions in the scene that aren't mentioned in this text:

- Animals breathing air

- Antelopes listening for signs of predators

- Flies walking on rhino skin

- Leaves turning sunlight into chemical energy

- Branches holding leaves up

- Wind blowing up fine dust

- Jupiter pulling at all of them gravitationally

- ...

Notice also how the text works in little bursts. It picks out a specific part of the picture first, tells us something about it, then it "jumps" to another part of the picture, or it adds another burst of information about something it mentioned before. This feels entirely natural, but only because of habit. No such bursts exist in the scene being described. So why does language have to be like this?

Network Wrangling

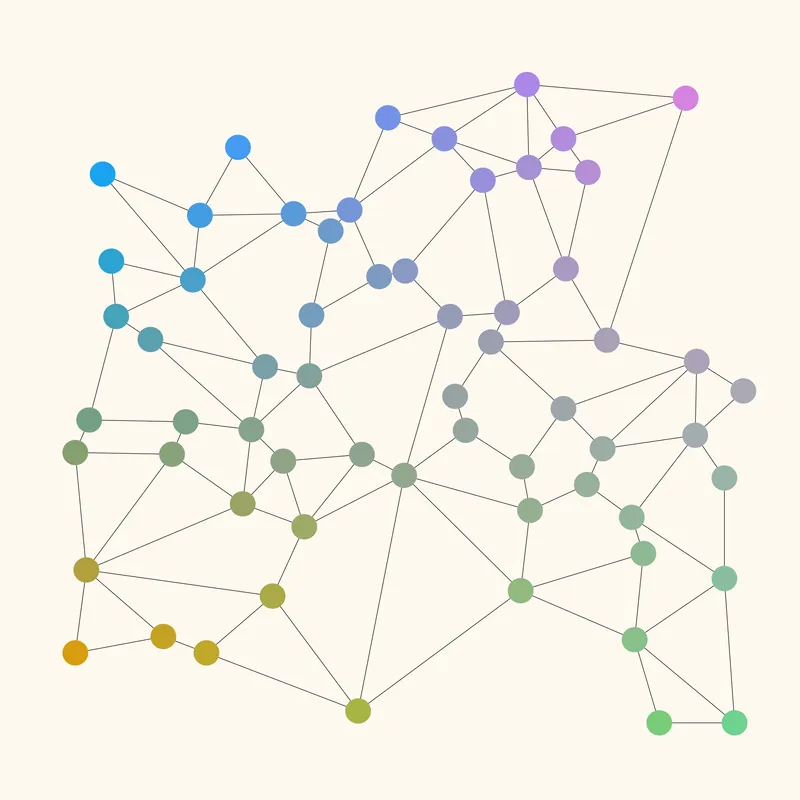

Before we put it into words, the scene is a network of inter-relations, something like this:

This is of course an enormous simplification—there are many more moving parts in the picture, each with many more "links" for interactions with other parts. But it works well enough to show the operation of language.

As you can see from this first drawing, the nodes (the objects we give names to in the picture) have lots of relationships among themselves, and they're also linked to other things outside the frame. We're looking at a small patch of "nodes" in the seamless network that is the Universe.

The colors here have no deep meaning, except to show that there is a gentle continuity between things to begin with. You could see the transition between colors as a fuzzy boundary that we make precise by assigning names and categories to things. For example, certain shades of blue might correspond with what, in the savanna photo, we call a rhino, and purple with an antelope. We'll return to the colors later on.

Immediately we face the main obstacle we started with: we can only speak or write one word at a time. In other words, language is one-dimensional, while the world is multidimensional.

Computer scientists have two common ways to "serialize" (convert into a sequence) the complex information of a network. The first is called an adjacency matrix. This is a square matrix with one row and one column for each node label. The cell at the intersection of a given row and column indicates whether there is a connection between those two nodes. Wikipedia gives the example of this simple network:

The corresponding adjacency matrix is:

|   | a | b | c |
|---|---|---|---|
| a | 0 | 1 | 1 |
| b | 1 | 0 | 1 |
| c | 1 | 1 | 0 |

You can read this as

- a has zero connections with a

- a has one connection with b

- etc.
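To make the matrix concrete, here is a minimal Python sketch that builds the adjacency matrix for the three-node example and then flattens it row by row into one sequence of 0s and 1s, which is what "serializing" it amounts to. The function and variable names are illustrative, and the sketch assumes an undirected network, as in the example.

```python
# The three-node example network (undirected): a-b, a-c, b-c.
nodes = ["a", "b", "c"]
edges = [("a", "b"), ("a", "c"), ("b", "c")]


def adjacency_matrix(nodes, edges):
    """Build an n-by-n 0/1 matrix; cell [i][j] is 1 if nodes i and j are linked."""
    index = {name: i for i, name in enumerate(nodes)}
    matrix = [[0] * len(nodes) for _ in nodes]
    for u, v in edges:
        # Undirected network: record the link in both directions.
        matrix[index[u]][index[v]] = 1
        matrix[index[v]][index[u]] = 1
    return matrix


matrix = adjacency_matrix(nodes, edges)

# Read the matrix back as sentences, one cell at a time.
for i, row_name in enumerate(nodes):
    for j, col_name in enumerate(nodes):
        print(f"{row_name} has {matrix[i][j]} connection(s) with {col_name}")

# Row by row, the matrix becomes a single sequence of 0s and 1s.
serialized = [cell for row in matrix for cell in row]
print(serialized)  # [0, 1, 1, 1, 0, 1, 1, 1, 0]
```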

This is fine for a computer, because a computer is built to work with data structures just like that, but we humans have a hard time thinking in matrix terms.

The second common method to serialize a network is called an adjacency list. It consists of giving a label to each node, then listing, for each label, the other labels it is connected to. For the three-node example above:

| node | relation | connected nodes |
|---|---|---|
| a | adjacent to | b, c |
| b | adjacent to | a, c |
| c | adjacent to | a, b |
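The adjacency list can be read straight off the matrix: for each row, collect the labels of the columns holding a 1. A small Python sketch reproducing the table above; the helper name `matrix_to_adjacency_list` is hypothetical.

```python
# Same three-node network, given as its adjacency matrix (rows/columns: a, b, c).
nodes = ["a", "b", "c"]
matrix = [
    [0, 1, 1],
    [1, 0, 1],
    [1, 1, 0],
]


def matrix_to_adjacency_list(nodes, matrix):
    """For each node, list the labels of the nodes it is connected to."""
    return {
        name: [nodes[j] for j, cell in enumerate(row) if cell]
        for name, row in zip(nodes, matrix)
    }


adjacency = matrix_to_adjacency_list(nodes, matrix)
for node, neighbors in adjacency.items():
    print(f"{node} adjacent to {', '.join(neighbors)}")
# a adjacent to b, c
# b adjacent to a, c
# c adjacent to a, b
```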

This seems more approachable. When you say "two rhinoceroses walking across a dirt path", you're making the equivalent of an adjacency list item:

| node | relation | connected nodes |
|---|---|---|
| two rhinos | walking | dirt path |
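Adding a relation label to each entry turns the adjacency list into a collection of (node, relation, connected node) triples, which is roughly what each active sentence in the description provides. The sketch below encodes the three active statements from GPT-4o's description this way. The exact wording of the triples and the helper name are illustrative assumptions, and "grass" stands in for the object the description only implies.

```python
from collections import defaultdict

# The three "active" statements, as (node, relation, connected node) triples.
statements = [
    ("two rhinos", "walking across", "dirt path"),
    ("herd of antelopes", "grazing", "grass"),  # "grass" is implied, not stated
    ("green vegetation", "contrasts with", "dry foreground"),
]


def labeled_adjacency_list(triples):
    """Group triples by source node: node -> list of (relation, target)."""
    adjacency = defaultdict(list)
    for source, relation, target in triples:
        adjacency[source].append((relation, target))
    return dict(adjacency)


scene = labeled_adjacency_list(statements)
for node, links in scene.items():
    for relation, target in links:
        print(f"{node} {relation} {target}")
```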

Like adjacency matrices, adjacency lists are extremely versatile and can express even enormously complex networks exactly. They are "lossless". Computers handle them well, thanks to their high speed and their extensible, exact memory.
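The "lossless" claim can be checked directly on the three-node example: rebuild the matrix from the adjacency list, read the list back out of that matrix, and compare. A minimal Python sketch; both helper names are illustrative.

```python
# Adjacency list for the three-node example (undirected).
adjacency = {"a": ["b", "c"], "b": ["a", "c"], "c": ["a", "b"]}


def adjacency_list_to_matrix(adjacency):
    """Rebuild the 0/1 matrix; rows and columns follow the list's key order."""
    nodes = list(adjacency)
    index = {name: i for i, name in enumerate(nodes)}
    matrix = [[0] * len(nodes) for _ in nodes]
    for node, neighbors in adjacency.items():
        for neighbor in neighbors:
            matrix[index[node]][index[neighbor]] = 1
    return matrix


def matrix_to_adjacency_list(nodes, matrix):
    """Read each row back as the list of connected node labels."""
    return {name: [nodes[j] for j, cell in enumerate(row) if cell]
            for name, row in zip(nodes, matrix)}


matrix = adjacency_list_to_matrix(adjacency)
restored = matrix_to_adjacency_list(list(adjacency), matrix)

print(matrix)                 # [[0, 1, 1], [1, 0, 1], [1, 1, 0]]
print(restored == adjacency)  # True: nothing was lost in either direction
```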

Unfortunately, we poor human beings are neither fast nor exact with our memory. Our capacity to retain detailed information is "lossy" and short term. Even though we seem to apply something like the adjacency list method, we can't keep long lists clearly in mind, so when the network at hand becomes too large, we tend to forget what we said or heard before.

So we're forced to do a lot of pruning and selecting.

Since we can't describe the whole Universe every time, the first thing we do is pretend that it's an isolated network; we forget the rest of the Universe for a while:

Note how the network is now isolated, disconnected from the broader network it was part of in the previous image.

That makes things much easier, but it's still too much. Every tiny point in the savanna picture (or any other picture, or anything else we might ever want to describe in life) can have its own relationships and interactions with the things around it. In the previous step we've removed the problem of the infinitely large surrounding Universe, but we still have the problem of the infinitely small.

To solve that, we pick out only the tiny minority of things that seem relevant or salient for the current communication, and ignore the rest:

The network is now much more manageable. The number of objects is something we can work with, something we can keep in our human heads. Still, we can do better:

We also ignore a lot of the relationships between the salient objects we've noticed (the double lines mark these). Some we ignore because they are obvious, and the listener will be able to fill them in on their own. Others are less obvious but, hopefully, irrelevant. In the photo, these could be things like "the larger rhino is grunting at the juvenile rhino" or "the 10th antelope from the right is scratching its own leg". Probably not the most important things to mention.

So we end up with a vastly simplified, skinny network like this:

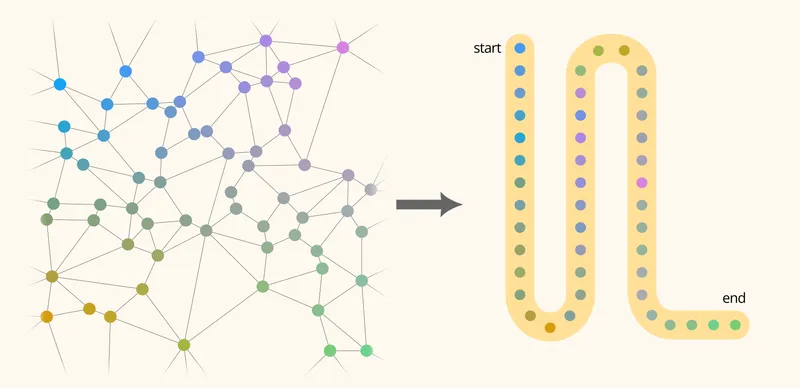
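The three pruning steps can be mimicked on a toy network. In this Python sketch every node, link, and choice of what counts as "in frame", "salient", or "ignorable" is a made-up assumption loosely based on the savanna photo; that those choices have to be made by hand, rather than computed, is rather the point.

```python
def induced_subgraph(edges, keep):
    """Keep only the links whose two endpoints are both in `keep`."""
    return [(u, v) for u, v in edges if u in keep and v in keep]


# Hypothetical toy network loosely based on the savanna photo.
world = [
    ("rhino", "dirt path"), ("rhino", "juvenile"), ("rhino", "air"),
    ("antelope", "grass"), ("antelope", "air"), ("fly", "rhino"),
    ("leaf", "sunlight"), ("branch", "leaf"), ("wind", "dust"),
    ("Jupiter", "rhino"), ("vegetation", "foreground"),
]

# Step 1: treat the frame as an isolated network (Jupiter is outside it).
in_frame = {"rhino", "juvenile", "dirt path", "air", "antelope", "grass", "fly",
            "leaf", "sunlight", "branch", "wind", "dust", "vegetation", "foreground"}
framed = induced_subgraph(world, in_frame)

# Step 2: keep only the objects judged salient for this description.
salient = {"rhino", "juvenile", "dirt path", "antelope", "grass",
           "vegetation", "foreground"}
salient_only = induced_subgraph(framed, salient)

# Step 3: drop links between salient objects that seem obvious or irrelevant.
ignored = {("rhino", "juvenile")}  # e.g. "the larger rhino is grunting at the juvenile"
told = [edge for edge in salient_only if edge not in ignored]

print(len(world), len(framed), len(salient_only), len(told))  # 11 10 4 3
print(told)  # [('rhino', 'dirt path'), ('antelope', 'grass'), ('vegetation', 'foreground')]
```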

At this point we've ignored all we could ignore without seriously damaging the message. All we have to do is knead the remaining structure a little, mold it into a simpler shape, even out the distances...

...and wrench it into a line, as if we were manipulating a metal wire:

Here we've kept the salient relations intact, and only stretched and compressed them so that everything forms a single file.

Note how we're forced to introduce jumps at certain points, so that we can go back and mention additional links that ran parallel to what we were saying before.

In some cases, the jumps return to things mentioned quite a while before. You see this by the sudden change of color across some of those jumps—yellow to greenish, blue to purple to green, and so on. These are entirely artificial discontinuities, artifacts of our shoehorning work, and they didn't exist in the original, smoothly gradated network.
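One way to picture the wrenching step is as a walk through the skinny network that states each link exactly once. The Python sketch below follows links from node to node and, whenever it hits a dead end, jumps back to the earliest-mentioned node that still has something unsaid, recording the jump. The walk rule, the toy network, and the function name are assumptions for illustration; the sketch also assumes the network is connected, and real speakers order things by salience rather than by any fixed rule.

```python
def linearize(edges, start):
    """Turn an undirected network into a single file of statements.

    Follows unused links from the current node; at a dead end, jumps back
    to the earliest-mentioned node that still has unused links.
    """
    adjacency = {}
    for u, v in edges:
        adjacency.setdefault(u, []).append(v)
        adjacency.setdefault(v, []).append(u)

    used = set()         # links already stated, stored without direction
    mentioned = [start]  # nodes in order of first mention
    line = []
    current = start
    while True:
        fresh = [n for n in adjacency[current] if frozenset((current, n)) not in used]
        if fresh:
            nxt = fresh[0]
            used.add(frozenset((current, nxt)))
            line.append(f"{current} -> {nxt}")
            if nxt not in mentioned:
                mentioned.append(nxt)
            current = nxt
            continue
        # Dead end: find an earlier node with something left unsaid.
        pending = [n for n in mentioned
                   if any(frozenset((n, m)) not in used for m in adjacency[n])]
        if not pending:
            return line
        current = pending[0]
        line.append(f"[jump back to {current}]")


# Hypothetical skinny network for the savanna scene.
edges = [("rhinos", "dirt path"), ("rhinos", "antelopes"), ("antelopes", "grass"),
         ("antelopes", "trees"), ("trees", "hills")]

print(" | ".join(linearize(edges, "rhinos")))
```

For this toy network the walk says rhinos -> dirt path, jumps back to rhinos, continues through antelopes to grass, jumps back to antelopes, and ends with trees -> hills. The two jumps play the role of the color discontinuities in the drawing: they come from the linearization, not from the network.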

What we end up with is a neat line of words, with a beginning and an end, that our gray matter can comfortably chew on, re-converting it into something resembling the original network in the form of an internal model.

At last we've reached a shape that we can put into language.

A sight, an emotion, creates this wave in the mind, long before it makes words to fit it; and in writing (such is my present belief) one has to recapture this, and set this working (which has nothing apparently to do with words) and then, as it breaks and tumbles in the mind, it makes words to fit it.

— Virginia Woolf, from her diary

What's Gained and What's Lost

Every newsreel and public speech, every novel and self-help blog and progress report, every written or spoken or gestured word is the result of this wrenching process.

If language was invented (it probably wasn't), the inventor was a genius. The fact that we can compress and reformat the infinitely intricate and multi-dimensional reality that surrounds us enough to transmit it with the vibration of vocal cords and finger movements is almost a miracle. That we can put to good use the information so packaged to predict and understand the world is mind-boggling. Maybe we should speak of the unreasonable effectiveness of language.

We should not become complacent, though. We're making this bewildering transformation for convenience:

Much is lost in the act. Intentionally or not, we ignore the majority of nodes (objects) and relationships that exist in reality; by streaming our words one after the other, we also lose information about the distance between those nodes, and how strongly they interact; we omit inner links based on subjective judgement; we gloss over the three- (or many-) dimensional structure of the thing we describe.

And—perhaps most importantly—we get the impression that the Universe has neat boundaries and jumps, fits and starts, instead of being a continuous mesh of interactions.

Language makes our society possible, but it also inevitably distorts the way we perceive and interpret reality. Sometimes this is good enough, and sometimes, as in poetry and prose, it might even be desirable. But when the goal is a deep understanding of things, language works against us. That's why it's so important to understand its weaknesses and constraints. As with any tool, we need to become intimately familiar with it. ●
