Replaced

What happened when I let an LLM pretend to be me

Marco Giancotti,

Cover image:

Photo by Evan Yang, Unsplash

If you've been following Aether Mug for more than seven days, you might have read the blog post I wrote last week. It's titled Meditations on the Color of Pee, and it's about a small logical contradiction I noticed in what is considered a healthy amount of water to drink in a day, and what that means for what the standard color of urine "should" be. Not a very serious or important topic, to be honest, but one I've found myself returning to over and over in my mind.

Except I just lied: I didn't write that blog post! A large language model did. Last week I was very short on time and, considering how long it takes me to write my usual stuff, I realized I might not be able to publish anything decent at all. The idea of using AI crossed my mind. Although the prospect of sitting back while a machine writes in my stead never attracted me, I figured that maybe doing it once could turn out to be an interesting experiment. And "the color of pee," while admittedly a bit gross, was the perfect topic to try: something I was mildly intrigued by, but not big or important enough to spend a long time developing.

The choice was between having AI slop it out for me or leaving the blog silent for a week, and I chose the former.

At least in terms of the time it took me, it was a success. In terms of quality, I'll share some of my thoughts below. But first: if you read it without knowing it was AI-generated, did you notice anything strange? Did it sound different from my usual writing, and was it all logically sound and interesting? Shoot me an email or a Bluesky/Twitter message with your impressions to let me know.

(To be fair, I didn't really hide the fact that it was generated: the author attribution on that page clearly states that "Anthropic Claude"—Sonnet 3.7, to be precise—was the actual writer, with ideas and editing by me; even the subtitle of the post hinted at this fact!)

How I Did It

Although AI slop blogs abound, you don't often get to see the process behind the scenes. For posterity, I'll share the approach I used for last week's blog post here.

It was my first time using an LLM for "creative nonfiction" writing, so this process is probably not the best or most efficient. Still, I use language models on a daily basis for other work tasks, including coding and drafting corporate documents, project proposals, and executive memos, and I'm quite confident in the overall method.

Step One: Think

Make of this piece of information what you will, but I had been wondering about the evolutionary and cultural implications of the color of pee for years. I created a note about it in my Obsidian vault in February 2024, but by then my thinking on it was already well formed. It wasn't fully spelled out, though, nor structured in a way that would be easy to follow for a generic blog reader, which is why I had to revisit the topic and massage it into a prompt last week.

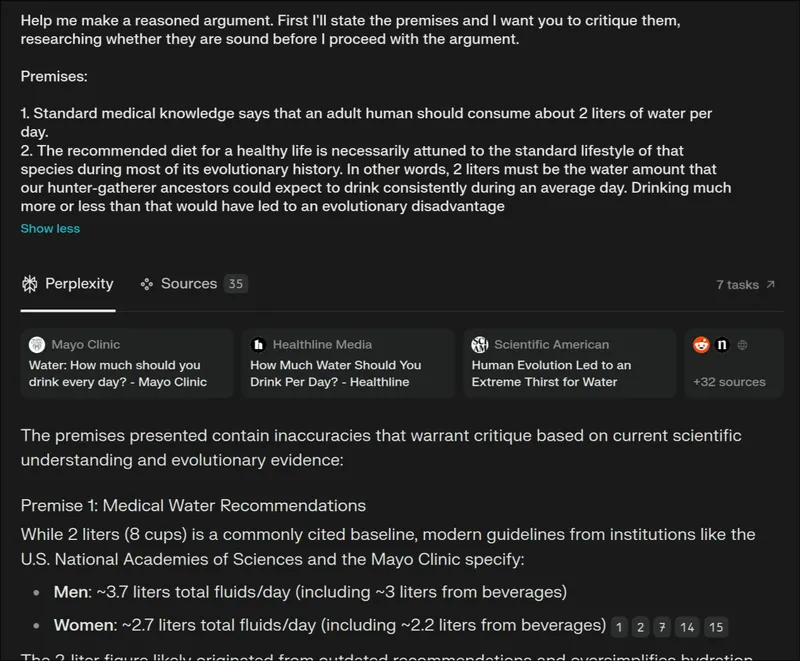

Step Two: Stress Testing

It's tempting, when you have a convincing argument in mind, to jump straight into writing, but of course rationality fails at the edge. My assumptions could be wrong, in which case no argument, no matter how elegant or acrobatic, would be able to save my conclusions.

So I sat down with Perplexity AI and had a critical conversation with a few reasoning models (mostly Deepseek R1 and Claude Sonnet 3.7 Thinking).

While this method isn't as good as bouncing ideas off a scientist or a highly educated friend, it works quite well and it's great for when you're on your own. I did a lot of back-and-forth this way, pushing back on some of the AI's arguments, and prodding it to strengthen its points when it went on irrelevant tangents.

One advantage of using a search-enabled LLM for this kind of self-critiquing is that it will give you its references. You can, and should, double-check these to make sure the sources are reliable and actually say what the AI claims they say (they usually do, but sometimes have added caveats).

After about 30 minutes of this, I had a revised, more science-informed understanding of the topic. I realized there was more nuance to the question of urine colors, and included that nuance in the following steps.

Note that I didn't take any of what the AI told me as gospel: I had to pick and choose the bits and arguments that were both relevant and meaningful (for or against my initial ideas) from a lot of useless stuff it offered me. This is a good skill to develop, with applications that go well beyond prompting.

Step Three: Outline

Based on my existing notes and on the insights I gained with the chatbot stress test, I created a very quick and rough outline of my ideas: what I found puzzling, what new nuance I had obtained in my brief research, and my thoughts about it.

Step Four: Context

Finally, I fired up Cursor—the AI-enabled code editor I use to write Aether Mug—and started a conversation with Claude Sonnet 3.7 Thinking. Before asking it to write the post, though, I gave it a lot of context to start from: the outline I had created, of course, but also the full text of an older post I'd written, as a style reference.

With all that background information ready, I asked Claude to write a blog post imitating my style, based on my "Color of Pee" outline, and of course it did it in a few seconds.
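The context assembly described above can be sketched in a few lines of Python. This is only an illustration of the idea (style sample first, then the outline, then the request), not what actually ran inside Cursor; the function name `build_writing_prompt`, the section labels, and the instruction wording are all hypothetical.

```python
def build_writing_prompt(outline: str, style_reference: str) -> str:
    """Combine a style sample and an outline into one drafting prompt.

    Hypothetical helper: the labels and wording are assumptions made
    for illustration, not the prompt used for the actual post.
    """
    sections = [
        "You are drafting a blog post on my behalf.",
        "STYLE REFERENCE (imitate the voice, not the content):",
        style_reference.strip(),
        "OUTLINE (cover these points, in this order):",
        outline.strip(),
        "Write the full post in the style of the reference, based on the outline.",
    ]
    # Blank lines between sections keep the parts visually distinct.
    return "\n\n".join(sections)


if __name__ == "__main__":
    demo = build_writing_prompt(
        outline="1. What puzzled me\n2. What the research added\n3. My thoughts",
        style_reference="(full text of an older post goes here)",
    )
    print(demo)
```

Keeping the style reference and the outline in separate, labeled sections makes it easier to swap either one out when a draft misses the mark.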

Step Five: Become an Editor

Claude wrote a first version, then revised it several times based on my comments. All I did was read the piece critically, select certain passages, and give it feedback via prompts. For example, at one point it wrote that a source I had discovered was a "surprisingly interesting rabbit hole," to which I responded:

This sentence ("surprisingly interesting") is exaggerated. Tone it down. It's not really a rabbit hole, just a small revision of my interpretation.

I also didn't like where its conclusions went, so I gave it a prompt starting with:

Change the conclusions in the last section of the blog post. They should focus on these things: ... [the points that made it in the final version]

Since this was an experiment, I made sure not to directly write a single word of the blog post (with the exception of the title, which I chose). It was all prompting: expand on this, shorten that, cut that other part off, and so on. If I were to do this regularly, I think I would directly edit certain portions myself.

Its Proper Place

Why this approach? It's something I developed over the past years as I went through several phases in my relationship with generative AI.

When ChatGPT first came out, I was enthralled by it and talked about it enthusiastically with anyone who would listen (for some reason, few of them were excited about it back then). Of course, at the time it was little more than an over-hyped toy, and I lost interest in it for a while.

Then the models got better and better, and I started using them for coding tasks and web searches. I gradually fell into what you could call a trough of laziness, where I relied more and more on the LLMs to do things for me, to the point of becoming worried about atrophying my thinking abilities. Was I offloading too much to this new technology?

The answer was yes. I was using LLMs wrong—as if they were consultants I could delegate my hard work to. I was so bought into the dream of sitting back and having instant magic happen for me that I often stopped thinking. This, of course, led to mistakes and time wasted trying to get it to fix its mess. But even when it did spit out correct answers, I was a mere spectator, and I began to fear I would develop an unhealthy dependence on that magic.

I don't know exactly what triggered a change, but one day I realized that I was (mostly) out of the trough. Maybe I read some prompting advice that gave me a better framing of the whole matter (needless to say, my prompting approach isn't original or anything). Maybe it was just wisdom acquired through my daily trial and error. In any case, at some point I started seeing the AI not as a consultant, but as a young, smart, and very fast assistant (or secretary, or intern) who nevertheless lacks real-world experience and common sense.

It needs guidance, it needs nudges, and it often needs even extremely basic context spelled out for it.

Most importantly, you don't use an assistant's work as-is, without critical review and editing—whether the assistant is human or not. When you pass on or employ an assistant's output in your work, you're not allowed to blame them for any mistakes. If the output is good, great! You've achieved something you aimed for. If it's crap, though, it's your fault for not supporting the assistant better, and it's your responsibility to discard it and try something else.

Once I started seeing things this way, the problem of "AI as a crutch" mostly disappeared. I started thinking hard about how to prompt the models well, predicting the various pitfalls and dead ends they might fall into and avoiding them with carefully chosen wording. For complex queries (and I do a lot of those), I often spend more than 15 minutes composing a single prompt! Most of the time, this effort pays off with high-quality, deep responses that, all considered, speed up my work enormously. If anything, lately I've felt that the very act of crafting a good prompt has helped me think more clearly about the subject.

The approach I described above, where I spent time in an intense conversation with a language model and then looked for the right context to use as reference, is based on the AI-as-assistant framing. I could have directly asked Claude to "write a blog post about the ideal color of urine compared to the cultural stereotype of that color, in a lighthearted and pop-science style," and it would have done so in a fraction of the time it actually took me. But I can guarantee you, it would have produced something much, much worse—mildly informative, perhaps, but more boring and shallow. (In fact, I just tried that prompt and the answer was... I'll spare you the pee-related pun that crossed my mind.)

Deeply Sloppy

Alright, but how good was the blog post that came out of this elaborate process? I hope many readers will share their thoughts on this with me, but here are mine.

The blog post that came out wasn't very good. In fact, I think it was rather poor. I mean, from one point of view it's nothing short of magic, and I would have been astonished by this feat only four years ago. But when judging it as an Aether Mug post, it was not great. At least—boy, how I hope you'll agree!—it was worse than most AeMug posts.

In the spirit of taking responsibility: sorry! Last week, I published what I considered to be a sub-par blog post. One lesson I learned is that there is a limit to how much an editor can improve a piece, short of rewriting it entirely. But since I intended, all along, to dissect the experience in this follow-up post, I hope you'll forgive me.

Let's start with the things Claude did relatively well. It did replicate many of the patterns and idiosyncrasies of my own writing style: short paragraphs, informal tone, ample use of parentheses and self-posed questions, and things like that. In this sense, I was impressed (and not a little creeped out—similar to when I listen to my own recorded voice).

The language model also structured the text more or less as I would have, breaking it into sections with titles of a similar flavor to those I author. I'm not sure how much credit the AI deserves for this, though, since I did give it a loosely structured but complete outline to work from. All in all, I'd say it looks, at first glance, like a Marco post.

Dig even a little beneath the surface attributes of the text, though, and the illusion comes crumbling down. Some of its choices of expression felt cringey to me, like the cheesy "...the whole performance" and the reader-directed command, "think about it" (I generally avoid expressions like these because they can sound condescending).

And let's not talk about the awkward attempts at humor. Hey, I'm not saying that my own humor is brilliant or particularly witty, but I think I can do better than "a little nuance can really flush away our most golden assumptions."

As for the part where it says, "of course, my initial thoughts needed some correction (they usually do)," I see what it's doing. I guess it's a passable imitation of what I might have written—although I think I prefer it when self-deprecation comes from me.

The main reason I say that the post was of poor quality, though, lies in its logical flow. The argument is wobbly: first, it says in the "Reality Checks and Nuances" section that we don't have proof that people really believe "deep yellow" is the natural color of urine, then it concludes that we should be careful not to believe such a thing. There is a missing link there—an underdeveloped point about the influence of media on people's thinking patterns (or framings), and perhaps a missing concrete example or two. The way it is laid out in the published post, this hole is not glaring enough to destroy the whole argument, but the non sequitur does weaken its thesis. Are we justified in caring about the way pee is depicted in cartoons and illustrations? I don't think the piece makes a convincing case for that.

Then again, the original concept I prepared might have been too weak to begin with, so I still can't fully dump the responsibility onto the machine assistant. Perhaps another lesson to be learned is that you have to do the work of writing the whole thing yourself in order to confirm whether it is publish-worthy.

My Takeaways

A state-of-the-art LLM was unable to write a strong, deeply reasoned, and coherent blog post, even when given a backbone and plenty of reference materials. It's possible that other recent models (perhaps Gemini 2.5 Pro?) might do a somewhat better job at this, but I don't have high expectations for the time being. A good blog post—at least the kind of blog post I like—is more than a sequence of facts laid out in passable prose. It needs to convey a... zest, a charged intent while also being held back by tics and idiosyncrasies to some extent, revealing a struggle—an effort by the author to push beyond their own expressive abilities. In other words, it needs to reveal the presence of a human behind the scenes.

I'm not saying that LLMs will never be able to write blog posts. They already can, and they already do. There has always been a humongous amount of human-made "content" slop, and AI is already more than good enough to replace that (I hear that content mills are going extinct). My claim is that LLMs can't write a good blog post, under my definition, yet. Surely not from scratch, and apparently not even when given a human-crafted seed like the one I provided this time.

What about future versions? I think it is entirely possible that generative AI will become so good as to be able to write a great blog post, as long as it is closely guided and supervised by a human. Maybe we're not far from the day when an original, zesty human writer will be able to produce blog posts and essays of the same quality as before, but at five or ten times their unaided rate. With LLMs as amplifiers of their skills—as opposed to being replacements—future writers may be able to share their humanity, all their original and hard-earned insights, much more efficiently.

When that day comes, maybe I'll take advantage of this new tool. Maybe not—I haven't quite made up my mind. For now, I will stick to typing each word myself, even at the cost of being very slow at it. ●
