Process World, Object-Oriented Mind

How programmers' struggles are everyone's mental struggles

Marco Giancotti,

Cover image:

Young woman at a table, ‘Poudre de riz’ (detail), Henri de Toulouse-Lautrec

A couple of weeks ago I shared an attempt at visualizing framings and models, the basic tools of all human reasoning. I ended up with a kind of diagram that looks very much like good ol' UML.

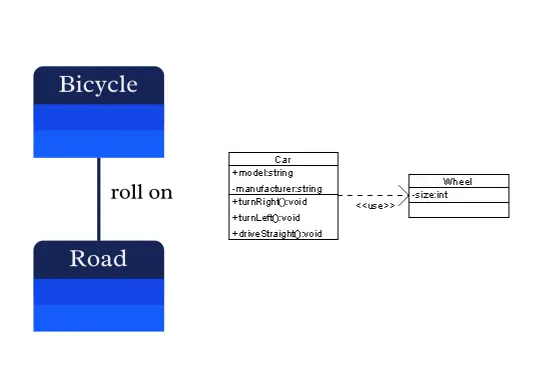

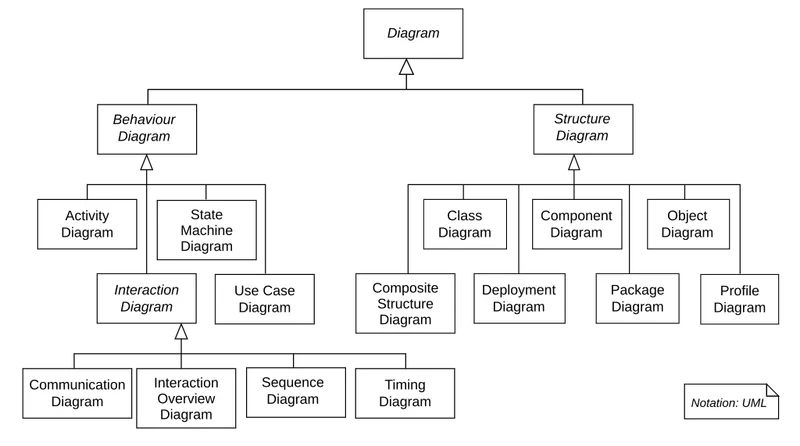

UML stands for Unified Modeling Language, and it is a set of standardized diagram types designed to prototype and communicate the most common software concepts, from architectural structures to process sequences. There are many kinds of diagrams in UML, but one of the most popular is what's called "class diagrams", of which the right side of the image above is an example.

Class diagrams are based on the idea that you can divide the world up into things called "classes", which are basically general categories of things, like Person and Job. These classes have their own properties and behaviors, and can interact with each other. For example, the diagram may show that every object in the Person class may have at most one link to an object in the Job class. Define more classes and more interactions, and you can model basically anything you want with your code.
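For readers who have never seen this in code, here is a minimal sketch in Python of what such a diagram might translate to. The field names (`name`, `title`) and the sample values are my own assumptions for illustration; the only thing taken from the text is the "at most one Job per Person" link.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Job:
    title: str  # hypothetical property, chosen for illustration


@dataclass
class Person:
    name: str  # hypothetical property, chosen for illustration
    # "At most one link to a Job": either no job (None) or exactly one.
    job: Optional[Job] = None


# Two objects of the Person class: one employed, one not.
alice = Person(name="Alice", job=Job(title="engineer"))
bob = Person(name="Bob")
```

The `Optional[Job]` field is one simple way to encode the "zero or one" relationship; a "many jobs" relationship would use a list instead.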

This sounds exactly like what I've been calling framings and models here on Aether Mug! Except I wasn't talking about software but about the human mind.

When I first noticed this parallel, I thought I might have simply defaulted to a familiar thinking paradigm without even realizing it. A sort of unconscious borrowing. That is probably true of the inspiration and the details but, thinking about it more, I believe the parallel runs much deeper than that.

The interesting question is why engineers trying to build better software programs would discover an ideal way to represent the human mind.

The Rise of the Object-Oriented

For a long time after the early days of computer programming, code usually took the form of lists of instructions, like this:

- Start with a total of 0.

- For each price in a given list of prices, add that price to the total.

- Once you've gone through the whole list, return the final total number, which is the tally of all prices.
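The three steps above could be sketched in Python roughly like this. The function name `tally` and the sample prices are assumptions of mine, used only for illustration.

```python
def tally(prices):
    """Add up a list of prices, one step at a time."""
    total = 0                # Start with a total of 0.
    for price in prices:     # For each price in the given list...
        total += price       # ...add that price to the total.
    return total             # Return the final tally.


# Illustrative data, not from any real shop.
print(tally([4.50, 12.00, 3.25]))  # prints 19.75
```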

This so-called procedural approach worked perfectly fine for small programs like that, but it ran into several issues for larger and more complex applications. The more moving parts you have, the more your procedural code becomes a spaghetti tangle of variables being passed around and hard-to-track operations. Often you wanted to do a very similar operation again, and you had to duplicate the same code with small variations, bloating the program. You had a high risk of losing track of the details and introducing more bugs.

What emerged as a solution to those problems was the object-oriented programming paradigm, or OOP. This was a remarkably different way to think about the code.

Instead of dry data being manipulated a step at a time, with OOP you identify the key entities (or classes), what their structure is, and how they behave. These classes usually have real-world names like Person and Job, and they are precisely what those UML diagrams (which historically came later) are meant to represent. A basic program of this kind would work like this:

- Define a class called ShoppingCart.

- The ShoppingCart starts with an empty list of items with prices.

- The ShoppingCart has the ability to add items with prices to it.

- The ShoppingCart has the ability to compute the total sum of the prices it contains.

- Create an object of the class ShoppingCart.

- Add three items to it with its predefined ability to add items.

- Return the total price of the ShoppingCart using its predefined ability to tally up its prices.
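In Python, a minimal sketch of that recipe might look as follows. The method names (`add_item`, `total`) and the items themselves are hypothetical; only the class name `ShoppingCart` comes from the description above.

```python
class ShoppingCart:
    def __init__(self):
        # The cart starts with an empty list of (name, price) items.
        self.items = []

    def add_item(self, name, price):
        """The cart's ability to add an item with a price."""
        self.items.append((name, price))

    def total(self):
        """The cart's ability to tally up the prices it contains."""
        return sum(price for _, price in self.items)


# Create an object of the class and use its predefined abilities.
cart = ShoppingCart()
cart.add_item("bread", 4.50)
cart.add_item("cheese", 12.00)
cart.add_item("apples", 3.25)
print(cart.total())  # prints 19.75
```

Notice that the list of prices is no longer passed around: it lives inside the cart, and the only way to touch it is through the cart's own abilities.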

I think you will agree that this feels less abstract and dry than the procedural example, even though it is doing essentially the same thing. (The object-oriented program is still procedural at its core, because it indicates what needs to be done at each step, but it clumps things together differently.)

You're not just "adding X to Y" any more; you're using the "abilities" of objects to do things. Of course there is much more to the object-oriented paradigm than dividing things into classes, but it is this real-world intuitiveness that, I think, led to its taking over the software world.

The theoretical roots of OOP date back to the 1950s and 1960s, but it really began to spread in the late 1970s. The 1990s, when the technology supporting it had been refined enough, saw a tidal wave of hype and excitement about the object-oriented way of coding. Countless books were written about it, more and more languages supported it, and it was clear to most that it was the future of software, and that the purely procedural way of doing things was going extinct.

Why this popularity? Apparently, OO code felt easier and more powerful. There is a surprising amount of psychological and educational research from that era showing its strengths. Among other things, it makes people much faster at sketching out the initial structure of their programs and imagining how they might work, and gives experienced programmers more time to test and evaluate various approaches in practice.

In other words, OOP seems to tap into some of the pre-existing wiring of our minds better than the older approaches did. This, of course, was not an accident. The scientific notion that people tend to think in terms of discrete objects and agents with behaviors and interactions is always cited as one of the main reasons for, and strengths of, this paradigm.

That's the theory.

Objects Disappoint

Today, the object-oriented paradigm is not considered to be "the future" anymore. Don't get me wrong, OOP is still wildly popular, being at the root of major programming languages like Java, C++, and C#. It isn't going anywhere, but it is certainly considered to be declining in popularity. Many of the top languages today support other paradigms either exclusively or as an option. New languages are appearing that return to the old procedural approach, or to a very different approach called functional programming (more on this below). What is causing this loss of popularity? I find this question to be very revealing about human nature.

As far as I can tell from the research on this question, there are two major categories of problems with the object-oriented view of code.

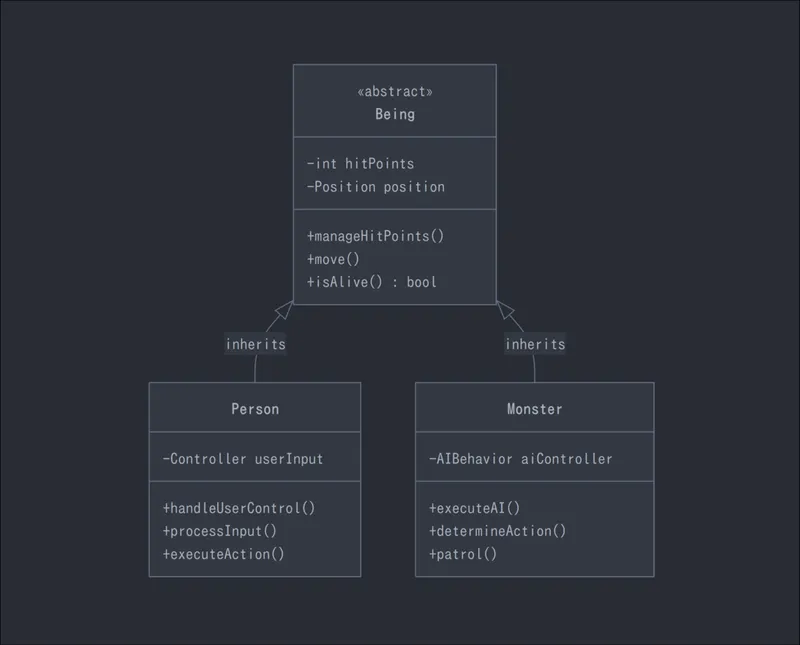

First, some aspects of OOP feel counterintuitive. A key feature of classes in OOP is the ability to create abstractions, that is, to define a "genealogy" of inheritance from more generic to more specific classes. For example, in a videogame where players battle monsters, the class Person may be defined as the "child" of a more abstract class called Being, which is also the parent of the Monster class. Being would be used to define all of the traits in common between all children, like the management of their hit points, their ability to move, etc. The sibling concrete classes Person and Monster, on the other hand, would each define characteristics specific to themselves only—the handling of user control for Person objects, for example, and autonomous AI behavior in the Monster objects.
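As a rough illustration, the videogame "genealogy" might be sketched in Python like this. Everything beyond the class names `Being`, `Person` and `Monster` is an assumption of mine: the one-dimensional `position`, the `"left"`/`"right"` commands, and the monster's "walk toward the target" behavior are stand-ins for real game logic.

```python
class Being:
    """Abstract parent: traits shared by every creature in the game."""

    def __init__(self, hit_points):
        self.hit_points = hit_points
        self.position = 0  # hypothetical 1-D position, for simplicity

    def move(self, steps):
        self.position += steps

    def take_damage(self, amount):
        # Hit points never drop below zero.
        self.hit_points = max(0, self.hit_points - amount)


class Person(Being):
    """Concrete child: controlled by the player."""

    def handle_input(self, command):
        if command == "right":
            self.move(1)
        elif command == "left":
            self.move(-1)


class Monster(Being):
    """Concrete child: moves on its own (a toy stand-in for AI)."""

    def act(self, target):
        if target.position > self.position:
            self.move(1)
        elif target.position < self.position:
            self.move(-1)
```

Neither `Person` nor `Monster` says anything about hit points or movement: they inherit both from `Being` and only add what is specific to themselves.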

In simple use cases with few moving parts, people have no trouble with such inheritance patterns. But when the problems get more complex, and the solution requires deep hierarchies and many kinds of interactions, people find it increasingly difficult to figure out the best way to define classes and their family trees. Indeed, they find it easier to think in the supposedly-outdated procedural style—despite its spaghettification risk!—than in terms of classes.

The second kind of problem people encounter with OOP is that it simply isn't always the best way to think about a problem. Some tasks are inherently about processes happening more than they are about entities doing things. For those tasks, research has shown that people actually perform better with the procedural way of programming.

These two obstacles often lead to more bugs, harder-to-debug bugs, and headaches with certain kinds of problems, for example those involving parallel and distributed computation. We'll come back to these observations later.

From the perspective of many software engineers today, OOP has failed to deliver the amazing promises it made forty years ago. I don't think many of those engineers are against it 100% of the time, but there is a general understanding that it is simply a tool among others, and that there are many important cases in which it is not the right one. Some of them may tell you that it very rarely is the right one.

Wait a minute. Wasn't OOP successful because it is grounded in the way we humans think—what I call framings, models, and their black boxes? Is there something even better taking its place?

A Humble Functional Answer

Another paradigm has recently been emerging in contrast with object-oriented programming, with a radically different approach. It is called functional programming and, as the name implies, it deals with functions, not objects.

A functional programming (FP) language would implement the shopping cart example as follows:

- Define a function that takes a list of numbers and recursively adds them up one at a time until it has gone through the whole list.

- Input a list of prices into the function.
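Python is not a functional-only language, but it can imitate the style well enough for a sketch. The function name `total` and the sample prices are my own assumptions. Note that nothing is ever modified here: there is no running total being updated, only a definition of what "the total of a list" is.

```python
def total(prices):
    """The total of a list is its first element plus the total of the rest."""
    if not prices:           # The total of an empty list is 0.
        return 0
    head, *rest = prices
    return head + total(rest)


# Input a list of prices into the function.
print(total((4.50, 12.00, 3.25)))  # prints 19.75
```

In practice Python limits how deep recursion can go, so for long lists one would reach for the built-in `sum` or `functools.reduce`, which express the same idea without explicit recursion.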

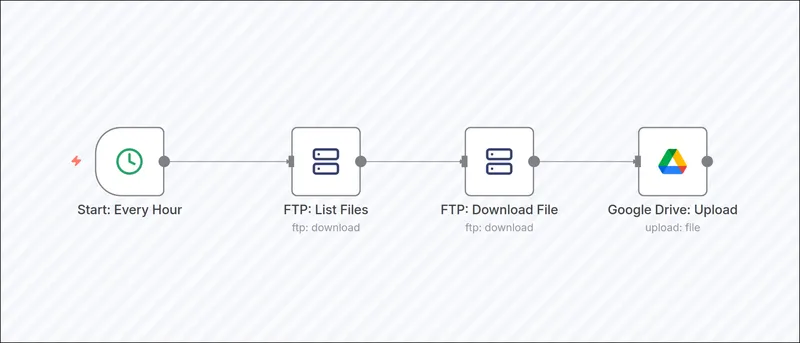

Unlike OOP, functional programming lacks concrete objects that "exist" and "do", and instead works with pipelines through which data is transformed.

(The simple example above may look similar to the procedural case, but it has some key differences that avoid many of its usual pitfalls. Instead of giving a list of instructions like "do this, then do this, then that", with FP you define what transformations are possible, then you see what happens to the data that you put in. The functional mindset is like building an automated assembly line, while the procedural mindset is like training a team of (very fast) assembly-line workers with the steps they have to execute.)
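To give a feel for the "assembly line" image, here is a small Python sketch. The helper `compose` and the two transformations (`drop_free_items`, `halve_all`) are hypothetical, invented purely for illustration: you first describe the stations of the line, then feed data in at one end and see what comes out of the other.

```python
from functools import reduce


def compose(*steps):
    """Chain functions left to right into a single pipeline."""
    return lambda data: reduce(lambda acc, step: step(acc), steps, data)


def drop_free_items(prices):
    # Station 1: keep only prices greater than zero.
    return [p for p in prices if p > 0]


def halve_all(prices):
    # Station 2: apply a (made-up) 50% discount to every price.
    return [p / 2 for p in prices]


# Build the assembly line once...
checkout = compose(drop_free_items, halve_all, sum)

# ...then push data through it.
result = checkout([10.0, 0.0, 20.0])
print(result)  # prints 15.0
```

Each station returns a new list instead of changing the one it received, which is a large part of why this style tends to play well with parallel execution.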

In general, FP leads to fewer bugs, and it works better with distributed systems and parallel processing compared to the other paradigms. And I don't think this is a coincidence.

The real world is a continuous network of interactions, and the currency being transferred and transformed through it is differences (what some call "information"). Boundaries are in the eye of the beholder: the things we tend to see as separate objects aren't really that separate after all. From this perspective, it seems to me that the functional paradigm is a much better way to represent the real world around us: everything is transformation, everything is "pipeline", nothing persists. What we call the "state" of something is just a snapshot of an ever-changing process, even when it is changing very slowly.

Like most of these approaches, FP has a long history, but it only recently started going mainstream. Many big programming languages, like Python, JavaScript, and Ruby, provide the tooling to code in the FP way. Even languages designed around FP, like Clojure and (to a large extent) Scala, have their relatively small but passionate communities of users. It may seem like the functional way might be the way of the future.

Sadly—because I love FP more than any other paradigm—I don't think FP is the future. Things are more nuanced than that.

Problem is, FP is hard! It is much less intuitive to design with than OOP, and arguably less intuitive than even the plain procedural style. Thinking in terms of functions is not what we usually do in our heads. You don't generally look at a shopping cart and say, "that is a useful product-aggregation process right there, son." You look at the cart and say, "oh, a cart, the thing you put products in."

Our reasoning is fundamentally concept-based: it works with black boxes whose boundaries are drawn as and when needed to make specific predictions. The virtual physics in our minds needs discrete building blocks to think about anything.

Even if I'm right—even if FP best reflects the world's incessant and borderless transformation of differences—functions are never going to be the only paradigm for software, and perhaps they will never even be the major one either. Our minds won't let them. Even saying "the function transforms the data" is describing a functional truth in an object-oriented language—English—where "functions" and "data" are black boxes. The same goes for "the world is a network." We just can't help it.

Living with the Tension

I love software for how it regularly leads to questions much deeper and more interesting than "how do I automate the checkout of my e-commerce website". On the surface it is "just" engineering, but it is really philosophy, psychology, and more rolled into one.

Remember that the first category of mistakes attributed to OOP had to do with abstractions and inheritance. This seems to be the part of the OOP method that least reflects our natural way of framing the world. While we do think "in objects", we always use them mentally in concrete, goal-oriented tasks, where the horizontal relationships between objects are what matters (e.g. "the person fights the monster").

To think about inheritance, on the other hand, you need to think "vertically", about the levels of abstraction of the categories involved ("a person is a being, and traits X and Y are shared by all beings"). We can do this—it is an important and useful effort—but it's not what our minds are best at.

The second kind of cognitive issue with OOP had to do with cases in which objects are just a bad fit for the problem. Here is where procedural and, even better, functional methods shine: not because they wouldn't apply just as well in other cases, but because these are the circumstances in which the object-oriented fictions in our minds break down in the face of reality.

In short:

- Object-orientation is declining in popularity and people see it as flawed not (mainly) because it doesn't reflect how our minds work, but because our minds don't work very well.

- Functional code is not more popular, and perhaps will never be, not because it is a worse model of reality, but because it is alien to how our minds tend to work.

What we are left with is an awkward mixture of both paradigms, both in software and in life.

When programming, I'm afraid we can't hope for a single neat paradigm to rule them all, a way of designing code that is both intuitive and world-accurate. We (programmers) have to find the right tool for each case, learn to discern in which situations the cognitive benefits of thinking in objects outweigh the technical downsides of less-functional code, and vice versa. Even better, we can use both at the same time and trade elegance for pragmatism.

When reasoning in general, outside the narrow world of software, I think the same wisdom applies. Knowing the limits of your framings and the failure modes of your models can only be good for you.

It may be worth the effort to borrow ideas from visualization tools like UML to represent what goes on in our minds—especially what goes wrong. Make this intuitive process of framing everything into something more deliberate when necessary. Design your understanding of the world like an engineer designs his programs. ●
